The Workflow Pattern

Over the past months I've finally started experimenting with event sourced workflows, a topic I had been contemplating for the last couple of years. I've taken inspiration from other products and projects, such as AWS Step Functions, GCP Workflows, Temporal, Camunda, NServiceBus and MassTransit, to name a few. I do want to point out that, if you're already heavily invested in any of these, I'm not trying to deter you from using them. After all, a simple pattern can't cover the breadth of a product. If you're applying event sourcing, then the following pattern may prove to be a useful stepping stone.

Why did I decide to climb this particular hill? Well, on the one hand, I'm not particularly impressed by the workflow authoring experience some products offer. Granted, this may be a point of contention. On the other hand, there aren't a lot of examples out there on how to model an event sourced workflow. But more importantly, it was an itch, and scratching it was long overdue.

What's a workflow anyway? For the purpose of this blog post, I'm going to position it as the automation of a process (or part of one) which we cannot accomplish in a single transaction, for one reason or another. Those reasons may be technical, functional, or both. In this context, workflows are more akin to process managers than to sagas, meaning a central unit that orchestrates. In my experience, most workflows can be modelled as state machines or statecharts. Being able to visualize those state machines in turn helps us see the bigger picture and spot deficiencies in our design more easily.

Where do workflows sit? Sometimes at the edges of a service, bounded context or module (however you partitioned your system), other times inside of one. This is also why their codified form may only be a small part of the overall process we're trying to automate. Central unit does not automatically mean centralizing the whole process. If we were to do that, we would put ourselves in the awkward position of not knowing where to place it. Instead, multiple workflows, each one in its proper place, can together automate the overall process to whatever degree we deem useful. At the edges they can observe both the outside and the inside and mediate between them, sometimes acting as a slayer of corruption, other times as a translator from Babylon.

What's the purpose of a workflow? To make decisions that help move the overall process along. Each decision causes a state transition, meaning the workflow goes from its current state to the next state, even if that next state is the same as the current one. This is acceptable, since we only make explicit those states that are particularly relevant to moving the process along. Well-designed workflows have a beginning, a middle and an end. Unless they are really simple, it's worth zooming in on each of those stages in a workflow's lifecycle. That implies something causes them to begin, to continue, and to end. In state machine speak, we call the things that cause transitions triggers. Not surprisingly, messages make for good trigger candidates.

Most workflows I encounter in existing implementations are more like riddles. They hide in columns or properties whose names end in Status or State, often with Active, Inactive, Pending or InProgress as values. Mingled with other boolean columns and properties, they indicate we're in a certain state of the machine, and thus somewhere along the process. Does this sort of implementation sound familiar? I'm sure it does for some of you. It baffles me how well versed domain experts and their proxies have become in this sort of columnspeak. The meaning of the values has to be explained over and over again because they are, in effect, weasel words. It's as if they've forgotten how to articulate a process without leaning on the current solution as a crutch. All the more reason to make the implicit explicit, if you ask me.

When it comes to implementing workflows there are quite a few problems that need to be tackled:

- How is a workflow initiated and how do you know which workflow to initiate?

- How will messages be delivered to a workflow?

- How are messages correlated to a workflow?

- What does a workflow need to remember, how and where do we store that memory, how do we correlate that memory back to a workflow?

- How and when are the decisions taken by a workflow executed?

- How can we observe workflow execution behavior?

This list is not exhaustive by any means, but it already covers some interesting problems. Most of these can be considered generic, that is, the solution is the same regardless of which workflow we're trying to implement. The components that take care of these problems are often highly reusable and, not surprisingly, products, libraries and frameworks are born to solve them. With that in mind, what's left is the authoring experience for developers.

Explaining workflows in the abstract is one thing, yet binding it to concrete examples is much more useful. So before we continue, I have to tell you a true story. Well, more like a confession 😉

I recently received a letter from the police with an amicable settlement for a traffic violation I committed. It's a rare phenomenon, me breaking a speed limit, but it did happen on this particular occasion. The car the infraction was committed with is registered to my company, which explains why the letter was addressed to my company instead of to me personally. In Belgium you've got 15 days to identify who drove the car at the time of the infraction - they can't make any assumptions - or, if you don't know who drove it, who is responsible for the car. Failing to do so means incurring a higher fine or being prosecuted. This is required because the infraction has to be added to someone's criminal record. Once the driver is identified, they in turn will be invited to pay the fine. You can still choose to pay as a company or have the driver pay. You can choose the order in which you perform payment and driver identification. You can ask for a payment plan, but it's limited to 6 months. If you don't pay in time, you'll get payment reminders, and the fine itself will increase with each reminder. You can contest the fine and have this dispute accepted or rejected. If the dispute is rejected, the procedure continues as though it wasn't contested: you just have to pay the fine. Not paying causes all kinds of escalations that would have me stray too far off topic.

There are plenty of possible workflows in the above narrative, if you ask me. I've taken some liberties in how I've modelled them, so bear with me.

At the edges of the subsystem that deals with traffic fines lives a workflow that's observing the police's reporting subsystem.

flowchart TB
    InputChannel([Input Channel])
    OutputChannel([Output Channel])
    OutputChannel--Police Report Published-->InputChannel
    subgraph Police Reporting System
        PoliceReport--Police Report Published-->OutputChannel
    end
    subgraph Traffic Fine System
        InputChannel--Police Report Published-->IssueTrafficFineForSpeedingViolationWorkflow
    end

Its job is to make sure that police reports that report a speeding violation cause a traffic fine to be issued to the subject to whom the vehicle is registered.

stateDiagram-v2
    state IsSpeedingViolationChoice <<choice>>
    [*] --> IsSpeedingViolation: PoliceReportPublished
    IsSpeedingViolation-->IsSpeedingViolationChoice
    IsSpeedingViolationChoice-->AwaitingSystemNumber: [yes]\n / Send(GenerateTrafficFineSystemNumber)
    IsSpeedingViolationChoice-->[*]: [no]\n / Complete
    AwaitingSystemNumber-->AwaitingManualIdentificationCode: TrafficFineSystemNumberGenerated / Send(GenerateTrafficFineManualIdentificationCode)
    AwaitingManualIdentificationCode-->[*]: TrafficFineManualIdentificationCodeGenerated\n / Send(IssueTrafficFine), Complete

Not all police reports are about speeding violations. For simplicity's sake, I've kept it to one offense per police report. If there's no speeding violation, we simply complete the workflow immediately, signaling that it is done. If there is a speeding violation, we need to generate a number that uniquely identifies the traffic fine we're about to issue throughout the rest of the system. Because the people to whom the car is registered may not have a digital identity (that's a thing in Belgium), or simply do not want to use it, we also need to generate a manual identification code. Together with the police report and traffic fine number, this code can be used to access the website where you can view, pay and contest your traffic fine. Once all that's done, we can issue the traffic fine and complete the workflow. Nothing too complicated, in my opinion.

This all largely happens within the Traffic Fine System. There's a perpetual motion of messages flowing in and out of the workflow, as depicted below. Follow the sequence numbers to trace the flow.

flowchart TB
    IssueTrafficFineForSpeedingViolationWorkflow[Issue\nTraffic Fine\nFor\nSpeeding Violation\nWorkflow]
    InputChannel([Input Channel])
    GenerateTrafficFineSystemNumberTopic([Generate\nTraffic Fine\nSystem Number\nTopic])
    GenerateTrafficFineManualIdentificationCodeTopic([Generate\nTraffic Fine\nManual Identification Code\nTopic])
    IssueTrafficFineTopic([Issue\nTraffic Fine\nTopic])
    subgraph Traffic Fine System
        direction TB
        InputChannel--"(1)Police Report\nPublished"-->IssueTrafficFineForSpeedingViolationWorkflow
        IssueTrafficFineForSpeedingViolationWorkflow--"(2)Send(Generate\nTraffic Fine\nSystem Number)"-->GenerateTrafficFineSystemNumberTopic
        GenerateTrafficFineSystemNumberTopic--"(3)Generate\nTraffic Fine\nSystem Number"-->TrafficFineSystemNumberGenerator
        TrafficFineSystemNumberGenerator--"(4)Traffic Fine\nSystem Number\nGenerated"-->InputChannel
        InputChannel--"(5)Traffic Fine\nSystem Number\nGenerated"-->IssueTrafficFineForSpeedingViolationWorkflow
        IssueTrafficFineForSpeedingViolationWorkflow--"(6)Send(Generate\nTraffic Fine\nManual Identification Code)"-->GenerateTrafficFineManualIdentificationCodeTopic
        GenerateTrafficFineManualIdentificationCodeTopic--"(7)Generate\nTraffic Fine\nManual Identification Code"-->TrafficFineManualIdentificationCodeGenerator
        TrafficFineManualIdentificationCodeGenerator--"(8)Traffic Fine\nManual Identification Code\nGenerated"-->InputChannel
        InputChannel--"(9)Traffic Fine\nManual Identification Code\nGenerated"-->IssueTrafficFineForSpeedingViolationWorkflow
        IssueTrafficFineForSpeedingViolationWorkflow--"(10)Send(Issue\nTraffic Fine)"-->IssueTrafficFineTopic
        IssueTrafficFineTopic--"(11)Issue\nTraffic Fine"-->TrafficFine
    end

Let's see what that could look like in code. I've picked F# for this purpose, leaning on its terseness, but honestly, this can be expressed in just about any programming language in a very similar way.

module IssueTrafficFineForSpeedingViolationWorkflow =
    let decide message state =
        match (message, state) with
        | PoliceReportPublished m, Initial ->
            match m.Offense with
            | SpeedingViolation _ ->
                [ Send(
                    GenerateTrafficFineSystemNumber {
                        PoliceReportId = m.PoliceReportId }) ]
            | _ -> [ Complete ]
        | TrafficFineSystemNumberGenerated m,
          AwaitingSystemNumber s ->
            [ Send(
                GenerateTrafficFineManualIdentificationCode
                    { PoliceReportId = s.PoliceReportId
                      SystemNumber = m.Number }
              ) ]
        | TrafficFineManualIdentificationCodeGenerated m,
          AwaitingManualIdentificationCode s ->
            [ Send(
                IssueTrafficFine
                    { PoliceReportId = s.PoliceReportId
                      SystemNumber = s.SystemNumber
                      ManualIdentificationCode = m.Code }
              )
              Complete ]
        | _, _ ->
            failwithf "%A not supported by %A" message state

Even if you're not familiar with F# and its pattern matching, the translation from the state diagram into its code counterpart should be fairly intuitive.

- This workflow is initiated by the PoliceReportPublished message. Nothing has happened yet, so we must be in the Initial state of the workflow. If the offense on the police report is a SpeedingViolation, we go ahead and decide to Send a GenerateTrafficFineSystemNumber message. If it's anything else, such as a ParkingViolation, we decide to immediately Complete the workflow.

- We then wait for the system number to be generated. Once that happens, the workflow is continued by the TrafficFineSystemNumberGenerated message, and it appears we've somehow moved on to the AwaitingSystemNumber state. The only decision to take, now that we know the system number, is to Send a GenerateTrafficFineManualIdentificationCode message.

- No surprise here: we wait for that manual identification code to be generated. Again, once that happens, the workflow is continued by the TrafficFineManualIdentificationCodeGenerated message, and we've moved on to the AwaitingManualIdentificationCode state.

- Our final decision is to Send the IssueTrafficFine message and to Complete the workflow.

If we encounter a transition our state machine does not understand, we can either ignore it by returning no decisions or fail hard and complain about it, which is what we've done here.

Let's pause here for a minute, because there's something important to point out. Our workflow takes decisions, but it doesn't actually carry them out. Making sure those decisions get executed is a delegated responsibility. Its decision logic is free of side effects and can be implemented as a pure function. This property is not to be underestimated: testing the workflow's decision logic becomes fairly straightforward. All you need is a message and some state to check whether the decisions actually taken match the ones you expected. If we generalize the signature of our workflow's decide function, we get something along the lines of this:

type Workflow<'TInput,'TState,'TOutput> = {
    Decide: 'TInput -> 'TState -> WorkflowCommand<'TOutput> list
}

It takes an input message and the current state it is in, and produces a list of decisions. For now, with what we've seen so far, that WorkflowCommand - what I've called decision up until now - is fairly simple. We either decide to send a message or to complete the workflow.

type WorkflowCommand<'TOutput> =
    | Send of message: 'TOutput
    | Complete
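Because decide is a pure function, a test comes down to a call and a comparison. The sketch below is hedged in a few ways. It trims the workflow down to its first two transitions. It uses hypothetical, simplified message shapes, with anonymous records standing in for the post's record types. The helpers expectDecisions and report are made up for illustration.

```fsharp
// Hypothetical, trimmed-down stand-ins for the post's message and state types.
type Offense =
    | SpeedingViolation of maximumSpeed: string
    | ParkingViolation

type Input =
    | PoliceReportPublished of {| PoliceReportId: string; Offense: Offense |}
    | TrafficFineSystemNumberGenerated of {| PoliceReportId: string; Number: string |}

type Output =
    | GenerateTrafficFineSystemNumber of {| PoliceReportId: string |}
    | GenerateTrafficFineManualIdentificationCode of {| PoliceReportId: string; SystemNumber: string |}

type State =
    | Initial
    | AwaitingSystemNumber of {| PoliceReportId: string |}

type WorkflowCommand<'TOutput> =
    | Send of message: 'TOutput
    | Complete

// The first two transitions of the workflow's decide function.
let decide message state =
    match message, state with
    | PoliceReportPublished m, Initial ->
        match m.Offense with
        | SpeedingViolation _ ->
            [ Send(GenerateTrafficFineSystemNumber {| PoliceReportId = m.PoliceReportId |}) ]
        | ParkingViolation -> [ Complete ]
    | TrafficFineSystemNumberGenerated m, AwaitingSystemNumber s ->
        let next = {| PoliceReportId = s.PoliceReportId; SystemNumber = m.Number |}
        [ Send(GenerateTrafficFineManualIdentificationCode next) ]
    | _ -> failwithf "%A not supported by %A" message state

// Given a message and a state, expect these decisions. No infrastructure needed.
let expectDecisions expected actual =
    if actual <> expected then
        failwithf "Expected %A but got %A" expected actual

let report offense =
    PoliceReportPublished {| PoliceReportId = "XG.96.L1.5000267/2023"; Offense = offense |}

decide (report (SpeedingViolation "50km/h")) Initial
|> expectDecisions [ Send(GenerateTrafficFineSystemNumber {| PoliceReportId = "XG.96.L1.5000267/2023" |}) ]

decide (report ParkingViolation) Initial
|> expectDecisions [ Complete ]
```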

Not all workflows are created equal. Some may also publish an event, schedule a timeout message to themselves, reply to whoever sent the input message to the workflow, or any mix of those. The point is to only include the decisions your workflows are actually going to take. The set of decisions (sending, publishing, replying, scheduling, completing, etc.) is finite, and that's a good thing. It means we can start thinking about generalizing it.

type WorkflowCommand<'TOutput> =
    | Reply of 'TOutput
    | Send of 'TOutput
    | Publish of 'TOutput
    | Schedule of after: TimeSpan * message: 'TOutput
    | Complete

When the workflow is about to begin, meaning it's about to receive its first message and take its first decisions, there's no previous state we know of. So we have to specify its initial state, the state it is in when we start out. The fact that I called it Initial in the IssueTrafficFineForSpeedingViolationWorkflow above is purely happenstance. I could have called it AwaitingPoliceReportWithPossibleSpeedingViolation instead. For simplicity's sake, I've encoded it into the workflow itself as InitialState because, well, it has to be encoded somewhere for the code surrounding the workflow to be able to find and use it. This way, when calling the decide function for the first time, we know which initial state to pass in.

type Workflow<'TInput,'TState,'TOutput> = {
    InitialState: 'TState
    Decide:
        'TInput
            -> 'TState
            -> WorkflowCommand<'TOutput> list
}

Often there's a desire to carry extra information alongside the actual message we receive as input. In a lot of other products this goes by a name ending in Context. Here I called it WorkflowTrigger. It can be useful at times, if the code surrounding the workflow treats the head and body of a message as distinct parts, but it's not strictly necessary. Next to the body, the workflow trigger could have properties we tend to classify as metadata or headers, e.g. source, message name, message version, causation and correlation identifiers, ... The main motivation to use it is when the workflow code itself relies on it as part of its decision making. If not, it's just noise.

type Workflow<'TInput,'TState,'TOutput> = {
    InitialState: 'TState
    Decide:
        WorkflowTrigger<'TInput>
            -> 'TState
            -> WorkflowCommand<'TOutput> list
}
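The post doesn't spell out what WorkflowTrigger looks like. Here's a minimal sketch. It assumes a record whose metadata fields mirror the kinds listed above; every field name is hypothetical. It also adds an adapter, ignoringMetadata (also a made-up name), for workflows whose decide function only cares about the body.

```fsharp
open System

// Hypothetical field set: source, message name and version, causation and
// correlation identifiers, next to the body.
type WorkflowTrigger<'TInput> =
    { Source: string
      MessageName: string
      MessageVersion: int
      MessageId: Guid
      CausationId: Guid option
      CorrelationId: string
      Body: 'TInput }

// A decide function that has no use for the metadata only looks at the body,
// so it can be lifted into the trigger-based signature.
let ignoringMetadata (decide: 'TInput -> 'TState -> 'TDecision list) =
    fun (trigger: WorkflowTrigger<'TInput>) (state: 'TState) -> decide trigger.Body state

// Example: a body wrapped in a trigger with illustrative metadata.
let trigger =
    { Source = "police-reporting"
      MessageName = "PoliceReportPublished"
      MessageVersion = 1
      MessageId = Guid.NewGuid()
      CausationId = None
      CorrelationId = "XG.96.L1.5000267/2023"
      Body = "XG.96.L1.5000267/2023" }

let decideOnBody (policeReportId: string) (state: int) =
    [ sprintf "%s seen in state %d" policeReportId state ]

let decideOnTrigger = ignoringMetadata decideOnBody
```

If a workflow does base decisions on, say, MessageVersion, it would take the trigger directly instead of going through the adapter.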

Another missing piece of the puzzle that probably had you 🥁 ... puzzled is how we actually moved through the state machine. There's an additional function that takes care of that.

module IssueTrafficFineForSpeedingViolationWorkflow =
    let evolve state message =
        match (state, message) with
        | Initial, InitiatedBy(PoliceReportPublished m) ->
            match m.Offense with
            | SpeedingViolation v ->
                AwaitingSystemNumber {
                    PoliceReportId = m.PoliceReportId }
            | ParkingViolation v -> Final
        | AwaitingSystemNumber s,
          Received(TrafficFineSystemNumberGenerated m) ->
            AwaitingManualIdentificationCode {
                PoliceReportId = s.PoliceReportId
                SystemNumber = m.Number }
        | AwaitingManualIdentificationCode _,
          Received(TrafficFineManualIdentificationCodeGenerated _) ->
            Final
        // Lifecycle markers and the workflow's own decisions don't move
        // this state machine, so replaying them keeps the current state.
        | _, (Began | Sent _ | Completed) ->
            state
        | _, _ ->
            failwithf "%A not supported by %A" message state

Again, it's a fairly straightforward translation from the state diagram. We start out in the Initial state, the solid sphere on the diagram. What name you give this state is totally up to you; pick whatever makes most sense. Receiving, or rather being initiated by, a PoliceReportPublished message in this state causes a transition either to the Final state, when there's no speeding violation, or to the AwaitingSystemNumber state, when there is one. Not only do we transition to the next state, we also copy any data we need to keep track of onto the next state. This data can come from the message, the previous state or simply from the transition itself. Receiving TrafficFineSystemNumberGenerated moves us from AwaitingSystemNumber to AwaitingManualIdentificationCode and, finally, receiving TrafficFineManualIdentificationCodeGenerated moves us from AwaitingManualIdentificationCode to the Final state. If we encounter a transition our machine does not understand, we can either ignore it by returning the same state or fail hard and complain about it, which is what we've done here. Our workflow signature can now cater for the evolve function too.

type Workflow<'TInput,'TState,'TOutput> = {
    InitialState: 'TState
    Evolve: 'TState -> WorkflowEvent<'TInput, 'TOutput> -> 'TState
    Decide: 'TInput -> 'TState -> WorkflowCommand<'TOutput> list
}

So the evolve function takes a previous state and an event, and produces the next state, which may well be the same state. But what's that WorkflowEvent<'TInput, 'TOutput> you have there? Well, when evolving its state, a workflow should be able to observe both the input it received and the decisions it took in the past. WorkflowEvent<'TInput, 'TOutput> is what embodies that. In the above evolve, the workflow state only moved based on workflow input, but that's just a coincidence.

type WorkflowEvent<'TInput, 'TOutput> =
    | Began
    | InitiatedBy of 'TInput
    | Received of 'TInput
    | Replied of 'TOutput
    | Sent of 'TOutput
    | Published of 'TOutput
    | Scheduled of after: TimeSpan * message: 'TOutput
    | Completed

Using this construct, we can start telling the story of a typical workflow execution. Do note that I've put the messages on a diet for legibility reasons.

[
    Began
    InitiatedBy(PoliceReportPublished {
        PoliceReportId = "XG.96.L1.5000267/2023"
        Offense = SpeedingViolation { MaximumSpeed = "50km/h" } })
    Sent(GenerateTrafficFineSystemNumber {
        PoliceReportId = "XG.96.L1.5000267/2023" })
    Received(TrafficFineSystemNumberGenerated {
        PoliceReportId = "XG.96.L1.5000267/2023"
        Number = "PPXRG/23TV8457" })
    Sent(GenerateTrafficFineManualIdentificationCode {
        PoliceReportId = "XG.96.L1.5000267/2023"
        SystemNumber = "PPXRG/23TV8457" })
    Received(TrafficFineManualIdentificationCodeGenerated {
        PoliceReportId = "XG.96.L1.5000267/2023"
        Number = "PPXRG/23TV8457"
        Code = "XMfhyM" })
    Sent(IssueTrafficFine {
        PoliceReportId = "XG.96.L1.5000267/2023"
        SystemNumber = "PPXRG/23TV8457"
        ManualIdentificationCode = "XMfhyM" })
    Completed
]

By now it should start to dawn on you how event sourcing fits into all of this. Using WorkflowEvent<'TInput, 'TOutput> we can build up a stream of events around the workflow. It records when the workflow began and which message initiated it. It records which decisions were taken, and how subsequently received messages continued it and led to further decisions. Finally, it records when the workflow completed. Past tense is great for evolving and describing history, but I prefer the imperative for decision making, which is why WorkflowCommand<'TOutput> is there. While it's a great authoring tool, it's a transient concept nonetheless. Translating between the two is trivial. It's mainly a matter of knowing whether we're beginning the workflow and turning the imperative (a workflow command) into past tense (a workflow event). By piping all messages and their accompanying decisions, i.e. our workflow commands, through this translation, we get the entire history of a workflow as a sequence of events.

let translate begins message commands =
    [
        if begins then
            yield Began
            yield InitiatedBy message
        else
            yield Received message
        for command in commands do
            yield
                match command with
                | Reply m -> Replied m
                | Send m -> Sent m
                | Publish m -> Published m
                | Schedule (t, m) -> Scheduled (t, m)
                // Complete carries no message, and neither does Completed.
                | Complete -> Completed
    ]

Note that, if you are using EventStoreDB, you could use the expected stream revision or the absence of a stream, denoted by StreamRevision.None, as the value of begins. You usually obtain this value when you try to read the stream and use the stored events to evolve the workflow.

Above, the choice was made not to bother the workflow author with explicitly indicating whether or not the workflow began and which message initiated it. This is a choice and a trade-off. Making it part of the decision making simply moves it into the authoring experience. That would work too, and just like for Complete, you must not forget to Begin as part of receiving the initial message. Stitching a Received message into the history becomes a tad trickier, and human error starts lurking. The minor difference between Received and InitiatedBy is again a design choice. I've gone back and forth on these sorts of things. It's good to play with the subtle differences in a design to see where they take you.
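To see how evolve, decide and translate fit together, here's a sketch of a single turn. It replays the stream's events with evolve to get the current state, calls decide, and translates the decisions into the events to append. A few assumptions apply. The stream is an in-memory list, where an empty list means the stream doesn't exist yet. Appending and optimistic concurrency are left to the caller. The toy workflow is made up and is not the traffic fine one.

```fsharp
open System

type WorkflowCommand<'TOutput> =
    | Reply of 'TOutput
    | Send of 'TOutput
    | Publish of 'TOutput
    | Schedule of after: TimeSpan * message: 'TOutput
    | Complete

type WorkflowEvent<'TInput, 'TOutput> =
    | Began
    | InitiatedBy of 'TInput
    | Received of 'TInput
    | Replied of 'TOutput
    | Sent of 'TOutput
    | Published of 'TOutput
    | Scheduled of after: TimeSpan * message: 'TOutput
    | Completed

type Workflow<'TInput, 'TState, 'TOutput> =
    { InitialState: 'TState
      Evolve: 'TState -> WorkflowEvent<'TInput, 'TOutput> -> 'TState
      Decide: 'TInput -> 'TState -> WorkflowCommand<'TOutput> list }

let translate begins message commands =
    [ if begins then
          yield Began
          yield InitiatedBy message
      else
          yield Received message
      for command in commands do
          yield
              match command with
              | Reply m -> Replied m
              | Send m -> Sent m
              | Publish m -> Published m
              | Schedule(t, m) -> Scheduled(t, m)
              | Complete -> Completed
    ]

// One turn: returns the events the caller should append to the workflow's stream.
let handle (workflow: Workflow<'TInput, 'TState, 'TOutput>) (stream: WorkflowEvent<'TInput, 'TOutput> list) (message: 'TInput) =
    // No events yet means the stream doesn't exist, so this message begins the workflow.
    let begins = List.isEmpty stream
    // Replay (fold) the history to recover the current state.
    let state = List.fold workflow.Evolve workflow.InitialState stream
    let commands = workflow.Decide message state
    translate begins message commands

// A toy workflow (hypothetical): acknowledge each number, complete after the second one.
let toy: Workflow<int, int, string> =
    { InitialState = 0
      Evolve =
          fun count event ->
              match event with
              | InitiatedBy _
              | Received _ -> count + 1
              | _ -> count
      Decide =
          fun n count ->
              if count = 0 then [ Send(sprintf "ack %d" n) ]
              else [ Send(sprintf "ack %d" n); Complete ] }

let first = handle toy [] 1
let second = handle toy first 2
```

The first call begins the workflow and yields Began, InitiatedBy 1 and Sent "ack 1". With those events as the stream, the second call yields Received 2, Sent "ack 2" and Completed.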

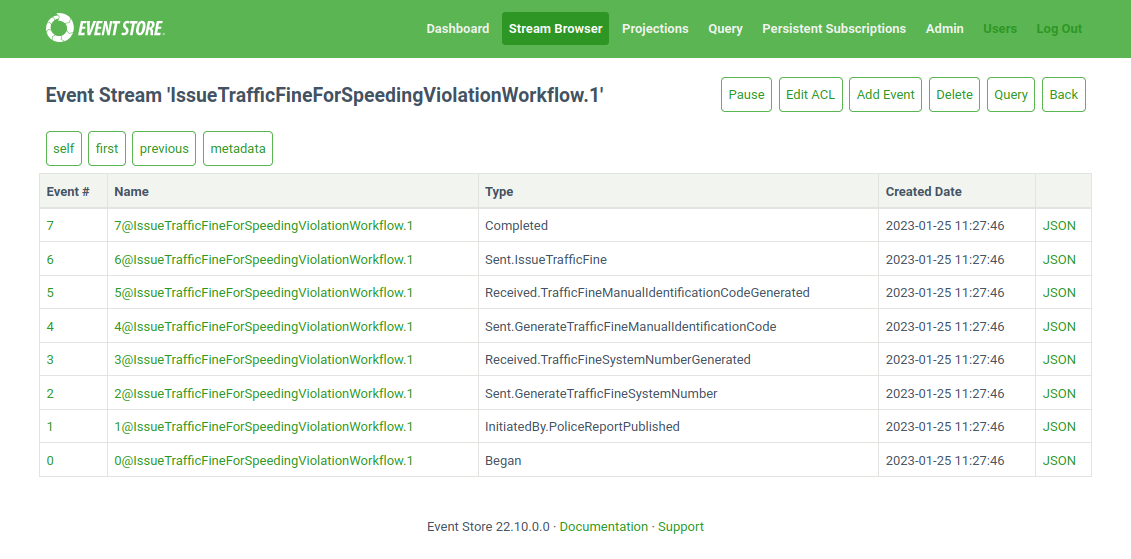

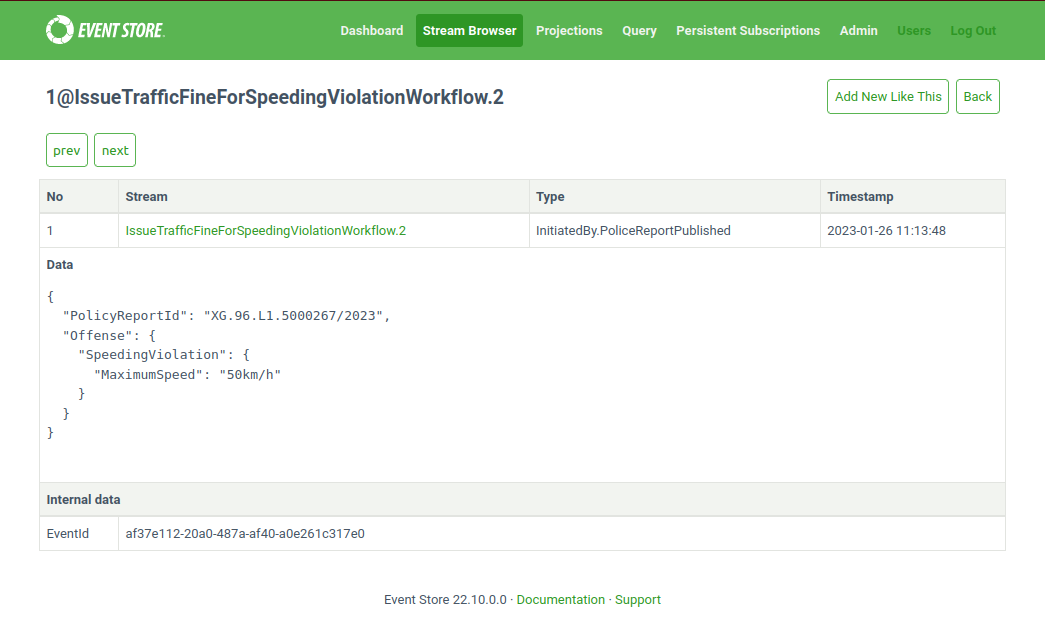

Now, with that in place and the rather mundane task of encoding input and output messages as json serialized events, it's not that hard to get to a working implementation in EventStoreDB, as shown below.

Which stream name format to pick for a workflow and how to encode the workflow events are both a matter of choice. There is no real difference from other event sourced entities in this regard. Encoding the workflow event in the event type caters for a nice form of readability, in my opinion. We could instead have made the intent (to send, to publish, to schedule, etc.) part of the event's metadata. By all means, bring your own conventions.
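Here's a sketch of one possible convention. It derives the event type from the workflow event and the union case name of the message it carries, e.g. Sent:GenerateTrafficFineSystemNumber. It derives the stream name from the workflow name and identity. The formats and the names caseName, eventType and streamName are hypothetical. caseName assumes messages are F# unions and relies on FSharpValue.GetUnionFields from FSharp.Core.

```fsharp
open System
open Microsoft.FSharp.Reflection

type WorkflowEvent<'TInput, 'TOutput> =
    | Began
    | InitiatedBy of 'TInput
    | Received of 'TInput
    | Replied of 'TOutput
    | Sent of 'TOutput
    | Published of 'TOutput
    | Scheduled of after: TimeSpan * message: 'TOutput
    | Completed

// Name of the union case a message was created with (assumes an F# union).
let caseName (message: 'T) =
    let unionCase, _ = FSharpValue.GetUnionFields(box message, typeof<'T>)
    unionCase.Name

// Hypothetical convention: "<workflow event>:<message>" as the event type.
let eventType (event: WorkflowEvent<'TInput, 'TOutput>) =
    match event with
    | Began -> "Began"
    | InitiatedBy m -> "InitiatedBy:" + caseName m
    | Received m -> "Received:" + caseName m
    | Replied m -> "Replied:" + caseName m
    | Sent m -> "Sent:" + caseName m
    | Published m -> "Published:" + caseName m
    // The delay itself would have to travel in the event's data or metadata.
    | Scheduled(_, m) -> "Scheduled:" + caseName m
    | Completed -> "Completed"

// Hypothetical convention: one stream per workflow instance.
let streamName (workflowName: string) (workflowId: string) =
    sprintf "%s-%s" workflowName workflowId

// Trimmed-down output messages for the example.
type Output =
    | GenerateTrafficFineSystemNumber of {| PoliceReportId: string |}
    | IssueTrafficFine of {| PoliceReportId: string |}

let sent: WorkflowEvent<Output, Output> =
    Sent(GenerateTrafficFineSystemNumber {| PoliceReportId = "XG.96.L1.5000267/2023" |})
```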

One thing to watch out for is that workflow streams may have copies of messages that originate from other streams. Without proper precautions in place your projections and other consumers may start observing the same messages twice. You could teach them to ignore workflow streams, you could make sure the event type does not match, etc ... fair warning, that's all.

At this point, there may be a few concerns.

We're using past tense to indicate that a message has been sent, published, scheduled, etc., but in reality that sending, publishing, scheduling, etc. has not taken place yet. When I read a decide function, I can reason about the decisions it's taking using the imperative. Yet as soon as I exit that decide function, it's a done deal, as far as authoring goes at least. I'm now leaning on other parts of the system to actually do the persisting, sending, publishing, scheduling, replying, etc., and they should not fail for reasons other than technical ones. From the perspective of the workflow itself, past tense makes sense: as far as it is concerned, those decisions have happened. If the decisions don't get persisted atomically, we'll retry at a later time, or that input message may well end up getting dead-lettered in some way. Now, suppose the decisions taken do not get carried out, are never carried out, or we suspect that could happen. In that case we should lean on scheduling timeouts for a workflow, or make reporting failure back to a workflow explicit. Additionally, we can observe and monitor workflow streams for the ones that Began but never Completed within a reasonable amount of time. This amount of time may be very different from one workflow to another and from one domain to another. Telemetry may be another angle from which to observe the behavior of our workflows.

Choosing to persist a workflow's events rather than its commands is a choice. We could ditch the entire concept of a workflow event and simply persist the workflow commands. We could ditch the entire concept of a workflow command and go straight for workflow events while authoring. Having these sorts of choices to make is great, just make sure they don't paralyze you.
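Spotting workflows that Began but never Completed can be a pure function too. Here's a sketch under two assumptions. A hypothetical WorkflowStream record pairs a stream's events with the moment the workflow began. The threshold is chosen per workflow.

```fsharp
open System

type WorkflowEvent<'TInput, 'TOutput> =
    | Began
    | InitiatedBy of 'TInput
    | Received of 'TInput
    | Replied of 'TOutput
    | Sent of 'TOutput
    | Published of 'TOutput
    | Scheduled of after: TimeSpan * message: 'TOutput
    | Completed

// Hypothetical monitoring input: a workflow stream's events plus when it began.
type WorkflowStream<'TInput, 'TOutput> =
    { WorkflowId: string
      BeganAt: DateTimeOffset
      Events: WorkflowEvent<'TInput, 'TOutput> list }

let hasBegun (stream: WorkflowStream<_, _>) =
    stream.Events |> List.exists (function Began -> true | _ -> false)

let hasCompleted (stream: WorkflowStream<_, _>) =
    stream.Events |> List.exists (function Completed -> true | _ -> false)

// Workflows that Began but did not Complete within the allotted time.
let overdue (threshold: TimeSpan) (now: DateTimeOffset) (streams: WorkflowStream<_, _> list) =
    streams
    |> List.filter (fun s -> hasBegun s && not (hasCompleted s) && now - s.BeganAt > threshold)
    |> List.map (fun s -> s.WorkflowId)

let now = DateTimeOffset(2023, 6, 1, 12, 0, 0, TimeSpan.Zero)

let streams: WorkflowStream<string, string> list =
    [ { WorkflowId = "a"; BeganAt = now.AddDays(-3.0); Events = [ Began; InitiatedBy "report" ] }
      { WorkflowId = "b"; BeganAt = now.AddDays(-3.0); Events = [ Began; InitiatedBy "report"; Completed ] }
      { WorkflowId = "c"; BeganAt = now.AddMinutes(-5.0); Events = [ Began; InitiatedBy "report" ] } ]

let late = overdue (TimeSpan.FromDays(1.0)) now streams
```

With a one-day threshold, only "a" is reported. "b" completed, and "c" began only five minutes ago.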

Why do we have a difference between the input and output messages? After all, there may be overlap between them. For example, scheduling an output message to oneself after a time will cause that message to appear as input to the same workflow. We could entertain the idea of unifying the input and output messages into one set of messages that the workflow either receives or emits. It simplifies encoding somewhat, but it obviously makes it less clear which messages serve as input or output.

The words command and event may be used in a way that interferes with what they mean in other areas where you apply event sourcing. Examples? A workflow command to publish an event. A workflow event that indicates a command or query was sent. It's best to view things from the workflow's perspective and to not look too closely at the tense of the message a workflow command carries. Doing anything else is going to hurt your brain.

Consequently, neither the type of message that triggers a workflow nor the type of message used as the payload of a decision is constrained, and that's deliberate. Whether a workflow gets triggered by an event, a command, a query, a document or a query response does not materially affect its authoring or its design. There really is no need for any sort of artificial translation or shoehorning. For decisions, it makes sense for the payload to match the semantics of the workflow command, be it sending, publishing, replying, scheduling, ..., if only for legibility reasons. There's something quite liberating about letting go.

There's no addressing. We're not specifying where to send or publish to in the workflow's decide function itself. This is intentional: the name of the message determines its destination. If that's not sufficient, I'd entertain the idea of adding logical endpoints as a destination to Send or Publish to, but never physical endpoints. It'd be fairly insidious to assume physical endpoints remain stable over time, since the whole point is to keep the history of a workflow persisted as a sequence of events. The map between the logical and physical endpoints could live in code or configuration.
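Here's a sketch of that idea. It assumes hypothetical logical endpoint names and made-up Pub/Sub topic paths. The message determines its logical endpoint. A separate map resolves that to a physical endpoint only when a command is carried out, so nothing physical ends up in the workflow's history. The map could equally be loaded from configuration.

```fsharp
// Hypothetical logical endpoints: the only kind of destination a decision may name.
type LogicalEndpoint =
    | SystemNumbers
    | ManualIdentificationCodes
    | TrafficFines

// Trimmed-down output messages from the running example.
type Output =
    | GenerateTrafficFineSystemNumber of {| PoliceReportId: string |}
    | GenerateTrafficFineManualIdentificationCode of {| PoliceReportId: string; SystemNumber: string |}
    | IssueTrafficFine of {| PoliceReportId: string; SystemNumber: string; ManualIdentificationCode: string |}

// The message determines its logical destination.
let logicalEndpointOf message =
    match message with
    | GenerateTrafficFineSystemNumber _ -> SystemNumbers
    | GenerateTrafficFineManualIdentificationCode _ -> ManualIdentificationCodes
    | IssueTrafficFine _ -> TrafficFines

// Logical-to-physical map; the topic paths are made up for illustration.
let physicalEndpoints =
    Map.ofList
        [ (SystemNumbers, "projects/example/topics/generate-traffic-fine-system-number")
          (ManualIdentificationCodes, "projects/example/topics/generate-traffic-fine-manual-identification-code")
          (TrafficFines, "projects/example/topics/issue-traffic-fine") ]

// Resolved at execution time, never stored in the workflow's stream.
let resolve message =
    physicalEndpoints |> Map.tryFind (logicalEndpointOf message)
```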

And ... Action!

With our workflow events now persisted, our stream itself has developed an interesting property. Not only can we use it to rebuild our workflow's state, by replaying (also known as folding) all workflow events in it using our evolve function, but it effectively acts as an outbox for all the workflow commands that have been written as workflow events. We can use persistent or catch-up subscriptions on top of those streams to have a workflow event processor carry out the workflow commands. Given that the workflow commands are generic, there is a high chance their execution can be generalized. When starting out with this pattern, however, it can be useful to postpone this generalization and get to grips with what carrying out the workflow commands means.

As an example, assuming we've set up a Pub/Sub topic per message type in Google Cloud Platform, our processor could publish the message of each workflow Send command to its corresponding topic, as shown below. The repetition is fairly easy to spot.

open System.Text.Json
open System.Text.Json.Serialization
open System.Threading.Tasks
open Google.Cloud.PubSub.V1
open Google.Protobuf

module IssueTrafficFineForSpeedingViolationWorkflowProcessor =
    // Hypothetical shape of "clients": one Pub/Sub publisher per destination topic.
    type Clients =
        { SystemNumberTopic: PublisherClient
          ManualIdentificationCodeTopic: PublisherClient
          IssueTrafficFineTopic: PublisherClient }

    let private options =
        JsonFSharpOptions
            .Default()
            .WithUnionExternalTag()
            .WithUnionUnwrapRecordCases()
            .ToJsonSerializerOptions()

    // Wraps a message in a Pub/Sub message, carrying the workflow identity
    // (correlation) and the message identity (idempotency) as attributes.
    let private envelop workflow_id message_id m =
        let json = JsonSerializer.Serialize(m, options)
        let envelope =
            PubsubMessage(Data = ByteString.CopyFromUtf8(json))
        envelope.Attributes.Add("workflow_id", workflow_id)
        envelope.Attributes.Add("message_id", message_id)
        envelope

    let handle (clients: Clients) workflow_id message_id command =
        task {
            match command with
            | Send(GenerateTrafficFineSystemNumber m) ->
                let envelope = envelop workflow_id message_id m
                do!
                    clients
                        .SystemNumberTopic
                        .PublishAsync(envelope)
                    :> Task
            | Send(GenerateTrafficFineManualIdentificationCode m) ->
                let envelope = envelop workflow_id message_id m
                do!
                    clients
                        .ManualIdentificationCodeTopic
                        .PublishAsync(envelope)
                    :> Task
            | Send(IssueTrafficFine m) ->
                let envelope = envelop workflow_id message_id m
                do!
                    clients
                        .IssueTrafficFineTopic
                        .PublishAsync(envelope)
                    :> Task
            | _ ->
                failwithf
                    "%A command has not been implemented."
                    command
        }

The working assumption is that some subscription mechanism is going to deliver the relevant workflow commands to the handle function above. This would involve translating some of those workflow events back into the imperative - another design choice, really depends on what you like to read and write. The handle function takes the topics to publish to, called clients here, as a dependency, along with the workflow's identity, the workflow command's message identity and the actual workflow command, and routes each message to the corresponding topic. Note that there's no reason to put all of a workflow's command processing in one function nor to publish messages on a topic. These are all implementation choices and for illustration purposes only.
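The translation back into the imperative mirrors translate. Here's a minimal sketch. It assumes the processor only needs to act on decisions, since Began, InitiatedBy and Received are history rather than work. It maps Completed to a Complete command that a processor may ignore or use for housekeeping. The name toCommand is hypothetical.

```fsharp
open System

type WorkflowCommand<'TOutput> =
    | Reply of 'TOutput
    | Send of 'TOutput
    | Publish of 'TOutput
    | Schedule of after: TimeSpan * message: 'TOutput
    | Complete

type WorkflowEvent<'TInput, 'TOutput> =
    | Began
    | InitiatedBy of 'TInput
    | Received of 'TInput
    | Replied of 'TOutput
    | Sent of 'TOutput
    | Published of 'TOutput
    | Scheduled of after: TimeSpan * message: 'TOutput
    | Completed

// Turns a past decision back into the imperative; input and lifecycle markers yield None.
let toCommand event =
    match event with
    | Replied m -> Some(Reply m)
    | Sent m -> Some(Send m)
    | Published m -> Some(Publish m)
    | Scheduled(after, m) -> Some(Schedule(after, m))
    | Completed -> Some Complete
    | Began
    | InitiatedBy _
    | Received _ -> None

let history =
    [ Began
      InitiatedBy "PoliceReportPublished"
      Sent "GenerateTrafficFineSystemNumber"
      Received "TrafficFineSystemNumberGenerated"
      Completed ]

let commands = history |> List.choose toCommand
```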

It's important that, whatever you do, the workflow identity flows along. Performing the GenerateTrafficFineSystemNumber command is what causes the TrafficFineSystemNumberGenerated event, same for GenerateTrafficFineManualIdentificationCode causing TrafficFineManualIdentificationCodeGenerated. The event needs to make its way back to our workflow. But which workflow? That's the purpose of the workflow identity, it acts as a correlation identifier.

It goes without saying that durably keeping track of the position you've reached in a catch-up subscription matters. It's not the kind of data you can afford to lose, if you catch my drift. Even then, some duplication is always at play. Without a distributed transaction spanning the resource that stores the position and the resources you interact with to carry out the workflow command, the command's message identity is your friend: it helps you deal with idempotency. For persistent subscriptions things are subtly different, in that tracking which workflow commands we've already observed is managed for us, but the chance of observing the same workflow command more than once is still present.
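Here's a minimal sketch of leaning on the message identity. It assumes a hypothetical ProcessedMessages store, kept in memory for brevity; in practice it would be durable. Note the order of operations. The id is recorded only after the side effect succeeds, which gives at-least-once behavior, so receivers should deduplicate on the message id as well.

```fsharp
open System.Collections.Generic

// Hypothetical stand-in for a durable record of processed message ids.
type ProcessedMessages() =
    let seen = HashSet<string>()
    member _.Contains(messageId: string) = seen.Contains messageId
    member _.Record(messageId: string) = seen.Add messageId |> ignore

// Carries out a workflow command's side effect unless its message id was seen before.
let executeOnce (processed: ProcessedMessages) (messageId: string) (effect: unit -> unit) =
    if not (processed.Contains messageId) then
        effect ()
        // Recorded after the effect: a crash in between means a redelivered
        // command runs again, hence receivers should dedupe on messageId too.
        processed.Record messageId

let processed = ProcessedMessages()
let published = ResizeArray<string>()

// The same workflow command observed twice, e.g. after a subscription restart.
executeOnce processed "message-1" (fun () -> published.Add "GenerateTrafficFineSystemNumber")
executeOnce processed "message-1" (fun () -> published.Add "GenerateTrafficFineSystemNumber")
```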

If you're into Event Modeling, you could use the Automation Pattern instead to carry out the workflow commands, using a todo list in the middle. This in turn would allow you to do things like batch executing commands across workflows, e.g. if what you're calling is costly.

I don't want to dwell too much on the implementation of an outbox, or on how messages make their way (back) to a workflow. These are solved problems and their implementation is highly contextual.

Look! No events!

There's a variation on this pattern that forgoes the use of event sourcing, or of an event store for that matter (*). If we draw inspiration from The Elm Architecture, it's not much of a stretch to design the workflow as:

```fsharp
type Workflow<'TInput, 'TState, 'TOutput> =
    { InitialState: 'TState
      Decide: 'TInput -> 'TState -> ('TState * WorkflowCommand<'TOutput> list) }
```

Here, the decide function takes an input message and a state, and produces a tuple of the new state and the decisions taken. No events in sight. Applied to our workflow it may look like this:

```fsharp
module IssueTrafficFineForSpeedingViolationWorkflow =
    let decide message state =
        match (message, state) with
        | PoliceReportPublished m, Initial ->
            match m.Offense with
            | SpeedingViolation _ ->
                (AwaitingSystemNumber { PoliceReportId = m.PoliceReportId },
                 [ Send(GenerateTrafficFineSystemNumber { PoliceReportId = m.PoliceReportId }) ])
            | _ -> (Final, [ Complete ])
        | TrafficFineSystemNumberGenerated m, AwaitingSystemNumber s ->
            (AwaitingManualIdentificationCode
                { PoliceReportId = s.PoliceReportId
                  SystemNumber = m.Number },
             [ Send(
                   GenerateTrafficFineManualIdentificationCode
                       { PoliceReportId = s.PoliceReportId
                         SystemNumber = m.Number }) ])
        | TrafficFineManualIdentificationCodeGenerated m, AwaitingManualIdentificationCode s ->
            (Final,
             [ Send(
                   IssueTrafficFine
                       { PoliceReportId = s.PoliceReportId
                         SystemNumber = s.SystemNumber
                         ManualIdentificationCode = m.Code })
               Complete ])
        | _, _ -> failwithf "%A not supported by %A" message state
```

Effectively, we've merged the behavior of the decide and evolve functions. Imagine we're using a relational database to persist the workflow state and commands. We can persist both in one transaction and use the outbox pattern for the workflow commands. There's not a lot of difference to it, is there?
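A minimal sketch of that setup, assuming a simplified `WorkflowCommand` with only `Send` and `Complete` (the post's own type has more cases). The relational database is replaced by a hypothetical immutable `Db` snapshot holding both the workflow state rows and the outbox rows; producing the next snapshot in one go stands in for committing one transaction. `handleInput` and the two-step `toy` workflow are illustrative only.

```fsharp
// Simplified WorkflowCommand; the post's own type has more cases.
type WorkflowCommand<'TOutput> =
    | Send of 'TOutput
    | Complete

type Workflow<'TInput, 'TState, 'TOutput> =
    { InitialState: 'TState
      Decide: 'TInput -> 'TState -> ('TState * WorkflowCommand<'TOutput> list) }

// Hypothetical stand-in for the relational database: workflow state rows and
// outbox rows live in one immutable snapshot, so producing the next snapshot
// models committing a single transaction.
type Db<'TState, 'TOutput> =
    { States: Map<string, 'TState>
      Outbox: (string * WorkflowCommand<'TOutput>) list }

let handleInput (workflow: Workflow<'TInput, 'TState, 'TOutput>) (workflowId: string) (input: 'TInput) (db: Db<'TState, 'TOutput>) =
    let state =
        db.States
        |> Map.tryFind workflowId
        |> Option.defaultValue workflow.InitialState

    let nextState, commands = workflow.Decide input state
    // State and outbox rows change together: both end up in the next snapshot, or neither does.
    { States = Map.add workflowId nextState db.States
      Outbox = db.Outbox @ (commands |> List.map (fun command -> workflowId, command)) }

// Usage: a hypothetical two-step workflow.
type Input =
    | Started
    | Finished

type State =
    | Idle
    | Running
    | Done

let toy: Workflow<Input, State, string> =
    { InitialState = Idle
      Decide =
        fun input state ->
            match input, state with
            | Started, Idle -> Running, [ Send "do-work" ]
            | Finished, Running -> Done, [ Complete ]
            | _ -> failwithf "%A not supported by %A" input state }

let empty: Db<State, string> = { States = Map.empty; Outbox = [] }
let afterStart = empty |> handleInput toy "wf-1" Started
let afterFinish = afterStart |> handleInput toy "wf-1" Finished
```

A separate relay would then read the outbox rows and dispatch them, exactly as with the event sourced variant.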

(*) What if I told you that you could use the event sourced version above instead and still persist only the workflow state and commands? All you're sacrificing in the end is remembering the past events: you would not know how you got to the workflow's current state, only what that state is. When decisions come out of the workflow's decide function, you can translate them into events and feed those to its evolve function, and out comes the next state, to be persisted alongside the commands. In effect, from the workflow authoring perspective, it does not materially matter how or what you persist; what matters is whether you use the decide and evolve functions separately or combine them into one function.
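A minimal sketch of that translation, under stated assumptions: the simplified `WorkflowCommand` has only `Send` and `Complete`, and the hypothetical `WorkflowEvent` type records both the received input and each decision taken (the post's own event type may be shaped differently). `combine` turns a separate decide/evolve pair into a single Elm-style step: decide, translate the decisions into events, fold those through evolve, and return the next state alongside the commands.

```fsharp
// Simplified WorkflowCommand; the post's own type has more cases.
type WorkflowCommand<'TOutput> =
    | Send of 'TOutput
    | Complete

// Hypothetical event form: the input the workflow received, and each decision it took.
type WorkflowEvent<'TInput, 'TOutput> =
    | Received of 'TInput
    | Decided of WorkflowCommand<'TOutput>

// Turn a separate decide/evolve pair into one step that yields the next state
// (to persist) and the commands (to put in the outbox). No events are stored.
let combine
    (decide: 'TInput -> 'TState -> WorkflowCommand<'TOutput> list)
    (evolve: 'TState -> WorkflowEvent<'TInput, 'TOutput> -> 'TState)
    : 'TInput -> 'TState -> ('TState * WorkflowCommand<'TOutput> list) =
    fun input state ->
        let commands = decide input state
        let events = Received input :: List.map Decided commands
        let nextState = List.fold evolve state events
        nextState, commands

// Usage: a hypothetical two-step workflow written in the event sourced style.
type Input =
    | Started
    | Finished

type State =
    | Idle
    | Running
    | Done

let decide input state =
    match input, state with
    | Started, Idle -> [ Send "do-work" ]
    | Finished, Running -> [ Complete ]
    | _ -> failwithf "%A not supported by %A" input state

let evolve state event =
    match state, event with
    | Idle, Received Started -> Running
    | _, Decided Complete -> Done
    | current, _ -> current

let step = combine decide evolve
let afterStart, sentOnStart = step Started Idle
let afterFinish, sentOnFinish = step Finished afterStart
```

The events only exist for the duration of `step`; once the next state is computed they are dropped, which is precisely the "remembering the past" you give up.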

Conclusion

The main things I want you to take away from this post are the ease of authoring a workflow, the supporting role state machines play, what an event sourced workflow could look like, and a glimpse at the mechanics. I've not gone into what transactions look like, how scheduling messages to oneself would work, how we'd tame the dragon called versioning, or how monitoring and telemetry fit into all of this. I've been sitting on this for some time now and felt it was better to publish as is and tackle those bits in a subsequent post.

Credits

The astute reader will notice a striking resemblance to the work that Jérémie Chassaing has been putting forth. I've adopted his terminology where I could. His work is at a higher level of abstraction and has broader applicability than what I'm proposing here. I'm probably straying here and there, as I didn't come to the workflow pattern through the lens of the decider pattern.

The workflow command names used here were heavily inspired by what NServiceBus offers via its message handler context. In a way, you could say that I've turned the methods into messages, channeling my inner Alan Kay.

Special thanks to Antonios Klimis and Oskar Dudycz for taking the time to review.